I know, I know.

Most artists, and professionals even more so, have mixed feelings about AI being applied to their field.

I do not. I love new & fresh tools that speed up my workflow.

With the public release of Stable Diffusion (SD), and a few free iterations of its trained models, we are impressively close to a technological breakthrough. For better or for worse, this is going to define how digital art is made in the future, and I prefer to embrace it rather than force myself into a reactionary stance, as if that would benefit me in any real way.

These are some of my very early attempts, made while getting familiar with the strange slang used to write prompts (I still have a long way to go, considering what others are doing):

Samples

Purple fish eating ラーメン (ramen)

I guess it is pretty self-explanatory.

A confused Koi

My brother asked me for a black horse riding a red koi in a blue pond. But the model does not really like to work this way: getting that kind of result would require some compositing. Regardless, the results I got are pure nightmare fuel.

And they are not even the worst ones.

Space shrimps & floating castle

Because why not!

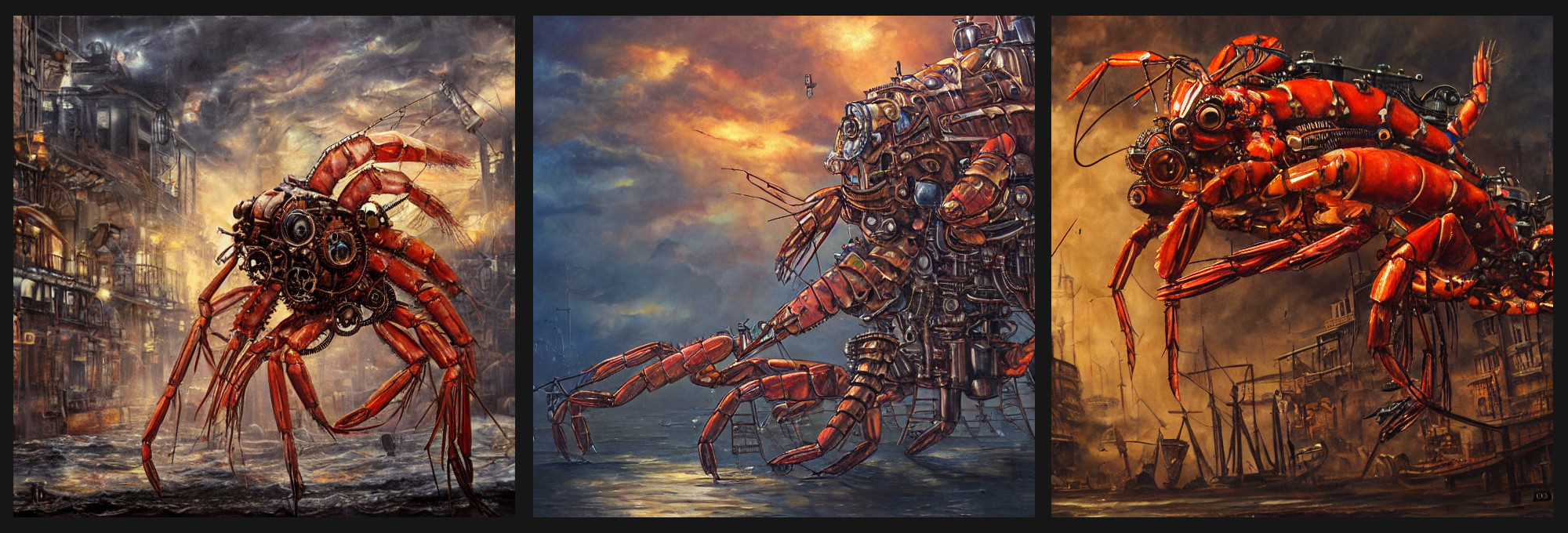

Mechanical shrimps destroying a city

This is what the singularity looks like. Trust me!

Tree on top of a head

My first attempt at using SD for vector graphics. I was seriously impressed.

Cyberpunk lorry

Truck in New York as a cyberpunk city. Oil paint.

Spider-Man!

Another one that needs no further comment 🕷

To the future & beyond!

Now that I am a bit more familiar with writing prompts, there are a few things I want to test next:

- Tessellation to generate bigger images with higher detail.

- Upscaling via other machine learning techniques (and using them to fix generated faces).

- Testing waifu-diffusion.

- Image compositing: since I often take photos, they could be matched with the generated images pretty nicely.

- Generation of seamless textures for 3D modelling in Blender.

I have already got very good preliminary results, and I want to see how deep this rabbit hole goes.